I ran into a corrupt MariaDB index page the other day and had to restore my MythTV database from the automatic backups I make as part of my regular maintenance tasks.

Signs of trouble

My troubles started when my daily backup failed on this line:

mysqldump --opt mythconverg -umythtv -pPASSWORD > mythconverg-20200923T1117.sql

with this error message:

mysqldump: Error 1034: Index for table 'recordedseek' is corrupt; try to repair it when dumping table `recordedseek` at row: 4059895

Comparing the dump that was just created to the database dumps in /var/backups/mythtv/, it was clear that it was incomplete since it was about 100 MB smaller.
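
That size comparison is easy to automate after each backup. Here is a minimal sketch, assuming both files are in the same format (both compressed or both uncompressed); the function name and the 90% default threshold are my own choices for illustration, not something MythTV provides:

```shell
# Return 0 (true) if NEW_DUMP is suspiciously small compared to REFERENCE_DUMP.
# Return 2 if either file cannot be read.
# Usage: dump_looks_truncated NEW_DUMP REFERENCE_DUMP [MIN_PERCENT]
dump_looks_truncated() (
    [ -r "$1" ] && [ -r "$2" ] || exit 2
    # wc -c prints the size in bytes; tr strips padding some platforms add.
    new_size=$(wc -c < "$1" | tr -d ' ')
    ref_size=$(wc -c < "$2" | tr -d ' ')
    min_percent=${3:-90}   # hypothetical default: flag anything under 90%
    # Integer comparison: new_size / ref_size < min_percent / 100
    [ $((new_size * 100)) -lt $((ref_size * min_percent)) ]
)
```

A backup script could call `dump_looks_truncated new.sql previous.sql` right after checking the exit status of mysqldump, and raise an alert when either check fails.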

I first tried a gentle OPTIMIZE TABLE recordedseek, as suggested in this StackExchange answer, but that caused the database to segfault:

mysqld[9141]: 2020-09-23 15:02:46 0 [ERROR] InnoDB: Database page corruption on disk or a failed file read of tablespace mythconverg/recordedseek page [page id: space=115871, page number=11373]. You may have to recover from a backup.

mysqld[9141]: 2020-09-23 15:02:46 0 [Note] InnoDB: Page dump in ascii and hex (16384 bytes):

mysqld[9141]: len 16384; hex 06177fa70000...

mysqld[9141]: C K c {\;

mysqld[9141]: InnoDB: End of page dump

mysqld[9141]: 2020-09-23 15:02:46 0 [Note] InnoDB: Uncompressed page, stored checksum in field1 102203303, calculated checksums for field1: crc32 806650270, innodb 1139779342, page type 17855 == INDEX.none 3735928559, stored checksum in field2 102203303, calculated checksums for field2: crc32 806650270, innodb 3322209073, none 3735928559, page LSN 148 2450029404, low 4 bytes of LSN at page end 2450029404, page number (if stored to page already) 11373, space id (if created with >= MySQL-4.1.1 and stored already) 115871

mysqld[9141]: 2020-09-23 15:02:46 0 [Note] InnoDB: Page may be an index page where index id is 697207

mysqld[9141]: 2020-09-23 15:02:46 0 [Note] InnoDB: Index 697207 is `PRIMARY` in table `mythconverg`.`recordedseek`

mysqld[9141]: 2020-09-23 15:02:46 0 [Note] InnoDB: It is also possible that your operating system has corrupted its own file cache and rebooting your computer removes the error. If the corrupt page is an index page. You can also try to fix the corruption by dumping, dropping, and reimporting the corrupt table. You can use CHECK TABLE to scan your table for corruption. Please refer to https://mariadb.com/kb/en/library/innodb-recovery-modes/ for information about forcing recovery.

mysqld[9141]: 200923 15:02:46 2020-09-23 15:02:46 0 [ERROR] InnoDB: Failed to read file './mythconverg/recordedseek.ibd' at offset 11373: Page read from tablespace is corrupted.

mysqld[9141]: [ERROR] mysqld got signal 11 ;

mysqld[9141]: Core pattern: |/lib/systemd/systemd-coredump %P %u %g %s %t 9223372036854775808 %h ...

kernel: [820233.893658] mysqld[9186]: segfault at 90 ip 0000557a229f6d90 sp 00007f69e82e2dc0 error 4 in mysqld[557a224ef000+803000]

kernel: [820233.893665] Code: c4 20 83 bd e4 eb ff ff 44 48 89 ...

systemd[1]: mariadb.service: Main process exited, code=killed, status=11/SEGV

systemd[1]: mariadb.service: Failed with result 'signal'.

systemd-coredump[9240]: Process 9141 (mysqld) of user 107 dumped core.#012#012Stack trace of thread 9186: ...

systemd[1]: mariadb.service: Service RestartSec=5s expired, scheduling restart.

systemd[1]: mariadb.service: Scheduled restart job, restart counter is at 1.

mysqld[9260]: 2020-09-23 15:02:52 0 [Warning] Could not increase number of max_open_files to more than 16364 (request: 32186)

mysqld[9260]: 2020-09-23 15:02:53 0 [Note] InnoDB: Starting crash recovery from checkpoint LSN=638234502026

...

mysqld[9260]: 2020-09-23 15:02:53 0 [Note] InnoDB: Recovered page [page id: space=115875, page number=5363] from the doublewrite buffer.

mysqld[9260]: 2020-09-23 15:02:53 0 [Note] InnoDB: Starting final batch to recover 2 pages from redo log.

mysqld[9260]: 2020-09-23 15:02:53 0 [Note] InnoDB: Waiting for purge to start

mysqld[9260]: 2020-09-23 15:02:53 0 [Note] Recovering after a crash using tc.log

mysqld[9260]: 2020-09-23 15:02:53 0 [Note] Starting crash recovery...

mysqld[9260]: 2020-09-23 15:02:53 0 [Note] Crash recovery finished.

and so I went with the nuclear option of dropping the MythTV database and restoring from backup.

Dropping the corrupt database

First of all, I shut down MythTV as root:

killall mythfrontend

systemctl stop mythtv-status.service

systemctl stop mythtv-backend.service

and took a full copy of my MariaDB databases just in case:

systemctl stop mariadb.service

cd /var/lib

apack /root/var-lib-mysql-20200923T1215.tgz mysql/

systemctl start mariadb.service

before dropping the MythTV database (mythconverg):

$ mysql -pPASSWORD

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| mythconverg |

| performance_schema |

+--------------------+

4 rows in set (0.000 sec)

MariaDB [(none)]> drop database mythconverg;

Query OK, 114 rows affected (25.564 sec)

MariaDB [(none)]> quit

Bye
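
For the safety copy taken before the drop, plain tar works just as well if apack (from atool) is not installed. The following is a sketch only; the function name and the archive naming scheme are made up for this example:

```shell
# Archive directory NAME (located under PARENT) into a timestamped .tgz
# inside DEST_DIR, then print the path of the archive that was written.
# Usage: archive_dir PARENT NAME DEST_DIR
archive_dir() (
    parent=$1
    name=$2
    dest_dir=$3
    stamp=$(date +%Y%m%dT%H%M)
    archive="$dest_dir/$name-$stamp.tgz"
    # -C makes the paths inside the archive relative to PARENT.
    tar -czf "$archive" -C "$parent" "$name" || exit 1
    # Listing the archive is a cheap check that it can be read back.
    tar -tzf "$archive" > /dev/null || exit 1
    printf '%s\n' "$archive"
)
```

With MariaDB stopped, as above, `archive_dir /var/lib mysql /root` would produce something like /root/mysql-20200923T1215.tgz.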

Restoring from backup

Then I re-created an empty database:

mysql -pPASSWORD < /usr/share/mythtv/sql/mc.sql

and restored the last DB dump prior to the detection of the corruption:

sudo -i -u mythtv

/usr/share/mythtv/mythconverg_restore.pl --directory /var/backups/mythtv --filename mythconverg-1350-20200923010502.sql.gz
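
When choosing which dump to restore, it can help to confirm that the compressed file itself is intact. This is a rough sketch, assuming the backup file names sort chronologically (as the timestamped names above do); the function name is hypothetical. Note that gzip -t only catches a damaged archive, not a dump that was cut short before it was compressed:

```shell
# Print the name of the newest *.sql.gz file in DIR that passes `gzip -t`.
# Returns non-zero if no intact dump is found.
# Usage: latest_valid_dump DIR
latest_valid_dump() (
    dir=$1
    best=
    # The glob expands in sorted order, so the last intact file wins.
    for f in "$dir"/*.sql.gz; do
        [ -f "$f" ] || continue
        if gzip -t "$f" 2>/dev/null; then
            best=$f
        fi
    done
    [ -n "$best" ] && basename "$best"
)
```

The result of `latest_valid_dump /var/backups/mythtv` could then be passed to the --filename option of mythconverg_restore.pl.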

Now, it's time to check the database to confirm that it restored properly:

mysqlcheck -p --silent --extended mythconverg

In order to restart everything properly, I simply rebooted the machine:

systemctl reboot

Root cause

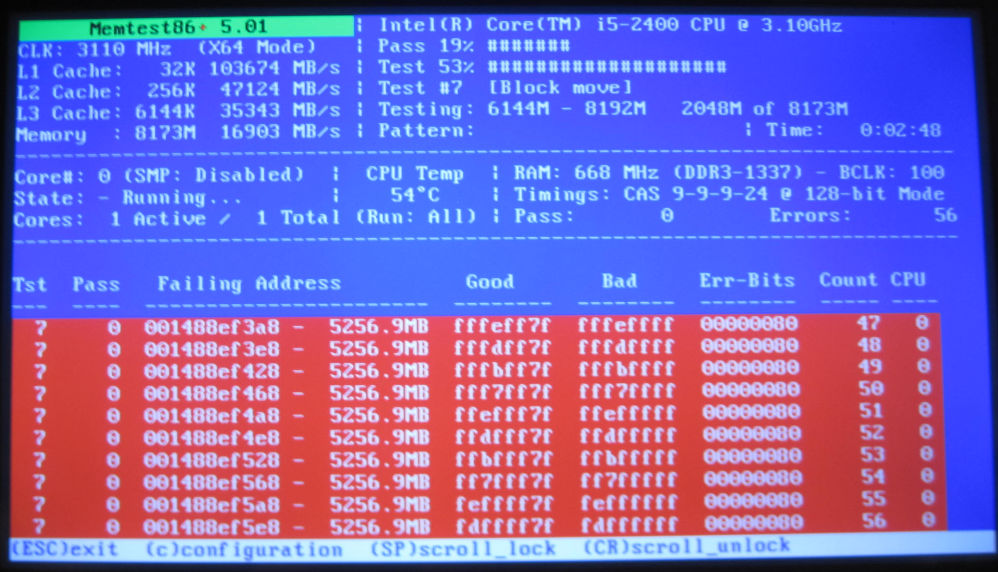

After walking through my recovery steps several times on the same computer, I finally figured out that the corruption was due to bad RAM.

I confirmed this theory by running memtest86+ overnight and got the following results:

I recently ran into a corrupted data pack in a Restic backup on my GnuBee. It led to consistent failures during the prune operation:

incomplete pack file (will be removed): b45afb51749c0778de6a54942d62d361acf87b513c02c27fd2d32b730e174f2e

incomplete pack file (will be removed): c71452fa91413b49ea67e228c1afdc8d9343164d3c989ab48f3dd868641db113

incomplete pack file (will be removed): 10bf128be565a5dc4a46fc2fc5c18b12ed2e77899e7043b28ce6604e575d1463

incomplete pack file (will be removed): df282c9e64b225c2664dc6d89d1859af94f35936e87e5941cee99b8fbefd7620

incomplete pack file (will be removed): 1de20e74aac7ac239489e6767ec29822ffe52e1f2d7f61c3ec86e64e31984919

hash does not match id: want 8fac6efe99f2a103b0c9c57293a245f25aeac4146d0e07c2ab540d91f23d3bb5, got 2818331716e8a5dd64a610d1a4f85c970fd8ae92f891d64625beaaa6072e1b84

github.com/restic/restic/internal/repository.Repack

github.com/restic/restic/internal/repository/repack.go:37

main.pruneRepository

github.com/restic/restic/cmd/restic/cmd_prune.go:242

main.runPrune

github.com/restic/restic/cmd/restic/cmd_prune.go:62

main.glob..func19

github.com/restic/restic/cmd/restic/cmd_prune.go:27

github.com/spf13/cobra.(*Command).execute

github.com/spf13/cobra/command.go:838

github.com/spf13/cobra.(*Command).ExecuteC

github.com/spf13/cobra/command.go:943

github.com/spf13/cobra.(*Command).Execute

github.com/spf13/cobra/command.go:883

main.main

github.com/restic/restic/cmd/restic/main.go:86

runtime.main

runtime/proc.go:204

runtime.goexit

runtime/asm_amd64.s:1374

Thanks to the excellent support forum, I was able to resolve this issue by dropping a single snapshot.

First, I identified the snapshot which contained the offending pack:

$ restic -r sftp:hostname.local: find --pack 8fac6efe99f2a103b0c9c57293a245f25aeac4146d0e07c2ab540d91f23d3bb5

repository b0b0516c opened successfully, password is correct

Found blob 2beffa460d4e8ca4ee6bf56df279d1a858824f5cf6edc41a394499510aa5af9e

... in file /home/francois/.local/share/akregator/Archive/http___udd.debian.org_dmd_feed_

(tree 602b373abedca01f0b007fea17aa5ad2c8f4d11f1786dd06574068bf41e32020)

... in snapshot 5535dc9d (2020-06-30 08:34:41)

Then, I could simply drop that snapshot:

$ restic -r sftp:hostname.local: forget 5535dc9d

repository b0b0516c opened successfully, password is correct

[0:00] 100.00% 1 / 1 files deleted

and run the prune command to remove the snapshot, as well as the incomplete packs that were also mentioned in the above output but could never be removed due to the other error:

$ restic -r sftp:hostname.local: prune

repository b0b0516c opened successfully, password is correct

counting files in repo

building new index for repo

[20:11] 100.00% 77439 / 77439 packs

incomplete pack file (will be removed): b45afb51749c0778de6a54942d62d361acf87b513c02c27fd2d32b730e174f2e

incomplete pack file (will be removed): c71452fa91413b49ea67e228c1afdc8d9343164d3c989ab48f3dd868641db113

incomplete pack file (will be removed): 10bf128be565a5dc4a46fc2fc5c18b12ed2e77899e7043b28ce6604e575d1463

incomplete pack file (will be removed): df282c9e64b225c2664dc6d89d1859af94f35936e87e5941cee99b8fbefd7620

incomplete pack file (will be removed): 1de20e74aac7ac239489e6767ec29822ffe52e1f2d7f61c3ec86e64e31984919

repository contains 77434 packs (2384522 blobs) with 367.648 GiB

processed 2384522 blobs: 1165510 duplicate blobs, 47.331 GiB duplicate

load all snapshots

find data that is still in use for 15 snapshots

[1:11] 100.00% 15 / 15 snapshots

found 1006062 of 2384522 data blobs still in use, removing 1378460 blobs

will remove 5 invalid files

will delete 13728 packs and rewrite 15140 packs, this frees 142.285 GiB

[4:58:20] 100.00% 15140 / 15140 packs rewritten

counting files in repo

[18:58] 100.00% 50164 / 50164 packs

finding old index files

saved new indexes as [340cb68f 91ff77ef ee21a086 3e5fa853 084b5d4b 3b8d5b7a d5c385b4 5eff0be3 2cebb212 5e0d9244 29a36849 8251dcee 85db6fa2 29ed23f6 fb306aba 6ee289eb 0a74829d]

remove 190 old index files

[0:00] 100.00% 190 / 190 files deleted

remove 28868 old packs

[1:23] 100.00% 28868 / 28868 files deleted

done
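
If a pack turns out to be referenced by more than one snapshot, picking the IDs out of the find output by hand gets tedious. Here is a small sketch that extracts them, assuming the output keeps the "... in snapshot ID (date)" shape shown above; the function name is my own:

```shell
# Read `restic find --pack` output on stdin and print each snapshot ID once.
snapshots_from_find_output() {
    sed -n 's/^.*in snapshot \([0-9a-f][0-9a-f]*\) .*$/\1/p' | sort -u
}
```

Piping the find command from above into `snapshots_from_find_output` prints one ID per line. I would still review that list by hand before passing anything to forget.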

Recovering from a corrupt pack

I ran into this problem a second time:

hash does not match id: want 4f0d26ae93d48ae9a274b0802c208fa47dcc2f97393378d63c208dd8dbcdf176, got 4f1eeb7ed1423358d4c579e641fe40a6340565c64f2df96ac19d28714a769806

but in that case dropping a single snapshot is not an option because the invalid pack is used in every snapshot!

Digging more into this problem, I realized I could trigger the error by requesting this pack specifically:

$ restic -r sftp:hostname.local: cat pack 4f0d26ae93d48ae9a274b0802c208fa47dcc2f97393378d63c208dd8dbcdf176 | sha256sum

Warning: hash of data does not match ID, want

4f0d26ae93d48ae9a274b0802c208fa47dcc2f97393378d63c208dd8dbcdf176

got:

4f1eeb7ed1423358d4c579e641fe40a6340565c64f2df96ac19d28714a769806

4f1eeb7ed1423358d4c579e641fe40a6340565c64f2df96ac19d28714a769806 -

If I ssh into my backup server and look at the pack file directly, I can see that its contents do not match its name (the name is supposed to be the SHA-256 hash of the contents):

$ sha256sum /mnt/data/home/machine1/data/4f/4f0d26ae93d48ae9a274b0802c208fa47dcc2f97393378d63c208dd8dbcdf176

4f1eeb7ed1423358d4c579e641fe40a6340565c64f2df96ac19d28714a769806 /mnt/data/home/machine1/data/4f/4f0d26ae93d48ae9a274b0802c208fa47dcc2f97393378d63c208dd8dbcdf176
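
The same check can be run over every pack directly on the backup server. This is a minimal sketch, assuming the data/XX/HASH layout seen in the path above and that sha256sum is available; the function name is hypothetical. On a repository of this size it has to read every pack, so expect it to take hours:

```shell
# Print every file under DATA_DIR/*/ whose SHA-256 digest differs from its
# file name. Exit status is 0 if all names match, 1 otherwise.
# Usage: find_mismatched_packs DATA_DIR
find_mismatched_packs() (
    data_dir=$1
    status=0
    for f in "$data_dir"/*/*; do
        [ -f "$f" ] || continue
        want=$(basename "$f")
        got=$(sha256sum "$f" | cut -d ' ' -f 1)
        if [ "$want" != "$got" ]; then
            printf '%s: want %s, got %s\n' "$f" "$want" "$got"
            status=1
        fi
    done
    exit "$status"
)
```

For the repository above, that would be `find_mismatched_packs /mnt/data/home/machine1/data`.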

After looking at the underlying file that is involved in this data corruption:

$ restic -r sftp:hostname.local: find --pack 4f0d26ae93d48ae9a274b0802c208fa47dcc2f97393378d63c208dd8dbcdf176

Found blob aec3a1b637a09f173652d509c1f39b8248deeba83d6c74ca99749676d7a4fb75

... in file /mnt/pub/video.mkv

(tree 7b83ceeb0cadb35a6e1103ebb37152e9b1338469fbc782256b95c0ddb6d4cc4e)

... in snapshot b0c7a69f (2021-04-16 09:41:47)

I decided that I didn't care about potentially losing it and worked around the invalid filename by renaming the pack (on the backup server) to its current hash:

$ cd /mnt/data/home/machine1/data/4f/

$ mv 4f0d26ae93d48ae9a274b0802c208fa47dcc2f97393378d63c208dd8dbcdf176 4f1eeb7ed1423358d4c579e641fe40a6340565c64f2df96ac19d28714a769806

That didn't work and made restic prune fail.

Since I still have the original video file, I figured I could just delete the pack and then trigger a new backup to re-upload it:

$ cd /mnt/data/home/machine1/data/4f/

$ rm 4f0d26ae93d48ae9a274b0802c208fa47dcc2f97393378d63c208dd8dbcdf176

$ restic -r sftp:hostname.local: backup --force

repository 1e424ff8 opened successfully, password is correct

Files: 620161 new, 0 changed, 0 unmodified

Dirs: 0 new, 0 changed, 0 unmodified

Added to the repo: 392.716 MiB

processed 620161 files, 1.637 TiB in 2:02:23

snapshot 710af622 saved

After doing that, I was able to run restic prune successfully and a simple check passed:

$ restic -r sftp:hostname.local: check

repository 1e424ff8 opened successfully, password is correct

created new cache in /var/tmp/restic-check-tmp/restic-check-cache-878723178

create exclusive lock for repository

load indexes

check all packs

check snapshots, trees and blobs

no errors were found

but a more thorough check exposed another corrupt pack:

$ restic -r sftp:hostname.local: check --read-data

using temporary cache in /var/tmp/restic-check-tmp/restic-check-cache-502831432

repository 1e424ff8 opened successfully, password is correct

created new cache in /var/tmp/restic-check-tmp/restic-check-cache-502831432

create exclusive lock for repository

load indexes

check all packs

check snapshots, trees and blobs

read all data

Pack ID does not match, want b6c9bd10, got 89dc2d85

[9:30:09] 100.00% 385573 / 385573 items

duration: 9:30:09

Fatal: repository contains errors

I removed that one too:

$ cd /mnt/data/home/machine1/data/b6/

$ rm b6c9bd10c68347d6bb76328d4bcb4b07c5dbf4f0b9317a4268844ea6c8b0b179

$ restic -r sftp:hostname.local: backup --force

repository 1e424ff8 opened successfully, password is correct

Files: 620209 new, 0 changed, 0 unmodified

Dirs: 0 new, 0 changed, 0 unmodified

Added to the repo: 12.156 GiB

processed 620209 files, 1.655 TiB in 2:10:10

snapshot b63f6890 saved

but this time pruning didn't work:

$ restic -r sftp:hostname.local: check --read-data

using temporary cache in /var/tmp/restic-check-tmp/restic-check-cache-315342197

repository 1e424ff8 opened successfully, password is correct

created new cache in /var/tmp/restic-check-tmp/restic-check-cache-315342197

create exclusive lock for repository

load indexes

check all packs

pack b6c9bd10: does not exist

check snapshots, trees and blobs

read all data

Load(<data/b6c9bd10c6>, 0, 0) returned error, retrying after 458.926983ms: file does not exist

Load(<data/b6c9bd10c6>, 0, 0) returned error, retrying after 952.879588ms: file does not exist

Load(<data/b6c9bd10c6>, 0, 0) returned error, retrying after 1.512969815s: file does not exist

Load(<data/b6c9bd10c6>, 0, 0) returned error, retrying after 1.345233563s: file does not exist

Load(<data/b6c9bd10c6>, 0, 0) returned error, retrying after 2.502610537s: file does not exist

Load(<data/b6c9bd10c6>, 0, 0) returned error, retrying after 2.391042907s: file does not exist

Load(<data/b6c9bd10c6>, 0, 0) returned error, retrying after 4.49847416s: file does not exist

Load(<data/b6c9bd10c6>, 0, 0) returned error, retrying after 4.678015434s: file does not exist

Load(<data/b6c9bd10c6>, 0, 0) returned error, retrying after 19.187660462s: file does not exist

Load(<data/b6c9bd10c6>, 0, 0) returned error, retrying after 14.884802036s: file does not exist

checkPack: Load: file does not exist

[9:36:58] 100.00% 388034 / 388034 items

duration: 9:36:58

Fatal: repository contains errors

$ restic -r sftp:hostname.local: prune

repository 1e424ff8 opened successfully, password is correct

counting files in repo

building new index for repo

[1:42:28] 100.00% 388033 / 388033 packs

repository contains 388033 packs (2638525 blobs) with 1.838 TiB

processed 2638525 blobs: 0 duplicate blobs, 0 B duplicate

load all snapshots

find data that is still in use for 23 snapshots

[1:38] 100.00% 23 / 23 snapshots

Fatal: number of used blobs is larger than number of available blobs!

Please report this error (along with the output of the 'prune' run) at

https://github.com/restic/restic/issues/new

So, as suggested on the restic issue tracker, I rebuilt the index:

$ restic -r sftp:hostname.local: rebuild-index

repository 1e424ff8 opened successfully, password is correct

counting files in repo

[44:35] 100.00% 395104 / 395104 packs

finding old index files

saved new indexes as [9a17c926 933252b2 2ef59ca2 679d4819 2661f12e 771b2013 d532cd44 55b4f8ab 5a3e6b2a 7b75e860 13c15b59 60d3266e 1fbe4dc5 f3b2396a c9debfa0 5ea78678 4e74f3ef bb9e99d9 9e335e0a 700a625d db9177b7 0a80aa31 9cbc436e 33211891 bfa29354 b67bc0f9 dfdda084 bc92aba3 c1dff125 652c4185 8c010d90 5c87bbfc fc4ba6f1 c0a7396e c54917ec 6aa26645 691cf977 f91223d3 cf9ff525 c1441550 ef71cb97 978192b2 40b416e9 31373aae 01bcade9 6f8b98bb 3e15ba5f d62b68c7 c92c5277 4270bae1 96822d59 cd45d864 d7830dad d6eae2a0 68cc1f1c 8501c6b7 fb95ce78 50479d33 e3afbbc9 f83fbfb6 097fd285 4d1ad340 b2a9b7ce 80add534 9cee064d 1cc5bbe3 7d40526b 334aecc9 952f8ed5 89d830b2 89e0c097 f3a3abf6 3c88ac9e c8cae3e5 1dc35cd3 3d70ba93 c59da9ee 2c19a371 5eed964c 1191bfee fa0e0c31 f79af9bb 214916f7 55cd25c4 03c5550f 8c36e374 7b0d2307 5c1c089a d62c5d18 d5c19d53 2e5f8647 27b5e5cf 87e164ff 0c9a7374 b2fa9e91 8781539c 1f130615 92396e28 1b630ee2 ecdb6b3c aae6de03 65fe2b9d 245d0258 6c446e2f 4f53e49e c6dba856 129c066d af0cb9e4 562adf6d eec351a0 6b9d2810 8d2b1aaf 69700877 4adff4e4 3ad2f960 79c8c084 fdccfa7a a0a1ef23 6ec37846 0b4f2199 8c2dc492 c304aeef d2fb56f3 30a0b5dd 20b01d0b c5bd22ad e96e0e72 73d39d25 57329b10 e9cfb1ef 685611e6 ce291336]

remove 132 old index files

and performed a forced backup:

$ restic -r sftp:hostname.local: backup --force

repository 1e424ff8 opened successfully, password is correct

Files: 620136 new, 0 changed, 0 unmodified

Dirs: 0 new, 0 changed, 0 unmodified

Added to the repo: 1.034 GiB

processed 620136 files, 1.612 TiB in 2:00:40

snapshot a15ee906 saved

followed by a prune:

$ restic -r sftp:hostname.local: prune

repository 1e424ff8 opened successfully, password is correct

counting files in repo

building new index for repo

[48:19] 100.00% 395322 / 395322 packs

repository contains 395322 packs (2664883 blobs) with 1.873 TiB

processed 2664883 blobs: 0 duplicate blobs, 0 B duplicate

load all snapshots

find data that is still in use for 25 snapshots

[1:40] 100.00% 25 / 25 snapshots

found 2664883 of 2664883 data blobs still in use, removing 0 blobs

will remove 0 invalid files

will delete 0 packs and rewrite 0 packs, this frees 0 B

counting files in repo

[10:14] 100.00% 395322 / 395322 packs

finding old index files

saved new indexes as [3304a0b5 040cac48 c38f9c4d 1619862d 2c314f95 c9a1c845 3f836327 4f2830a5 9e9a9806 3040f9bd 9e1b0589 146f395c 27d91277 08616709 38757c70 15093193 103ba3e1 e6cbe636 55fcbe20 92c12833 8a212821 a278e6eb 467328be 4b29f8c4 934de2a2 12d3f314 01c08b76 7101de54 afc8979b 18395477 48d140ef 48b48906 ee339513 742eea48 0d54eeb5 7dfbad62 54bdd724 33511da3 8b2628fd d566581d 0272fbf6 0510755d 64a39724 d4a51c31 432b0019 45469c45 c28d99fc c2329a41 c4dc9880 d52fdb9e 00b2c975 d791f871 c5e37a0e 1c3f7f40 008dda03 70cce88c e2bdef19 980839da a8388aad 9f2fdfc7 3f81fb07 20ec25f3 f3b0e273 8adfe021 5cb21bf4 f9664745 e81e0dc1 bdf674b3 4b43311f f576ee7b 6ff78a24 050d0d7a 079dda00 5a92ee95 c7a73677 42269868 c54be3e7 96a8339d 4fac763a aa859ad2 0282a555 d8145fdc 7fb9cae8 0b88bb4f 4ba5cdf5 6f4bc3ad 040580df fd5e0594 fe642426 58839033 0044dba1 73369fba d1d574aa 97833dff 469993c4 d6e89ba7 1e7378d3 3c5ce4a8 634c33b3 85db6047 2902f128 5c874b86 fa13fa7a 0e3319d6 7b5e5b57 41f864cf 72646b83 f3e19e87 cb28793a e2d3d8d5 42d7fac3 e5cc116f 5cb8d048 e5b54e10 1d3c59eb a5343bd8 9a11b3d9 5d607d3a c64ae7d5 84043ae5 ab28156d dbc777ba 870a4333 7bd74995 e21d054d 1983d216 c89603bd e767acff 2eb43682 44f44f58 9505e382 bb180450]

remove 136 old index files

done

That finally resolved the problems:

$ restic -r sftp:hostname.local: check --read-data

using temporary cache in /var/tmp/restic-check-tmp/restic-check-cache-120208924

repository 1e424ff8 opened successfully, password is correct

created new cache in /var/tmp/restic-check-tmp/restic-check-cache-120208924

create exclusive lock for repository

load indexes

check all packs

check snapshots, trees and blobs

read all data

[10:06:21] 100.00% 395322 / 395322 items

duration: 10:06:21

no errors were found

$ restic -r sftp:hostname.local: prune

repository 1e424ff8 opened successfully, password is correct

counting files in repo

building new index for repo

[1:46:03] 100.00% 395322 / 395322 packs

repository contains 395322 packs (2664883 blobs) with 1.873 TiB

processed 2664883 blobs: 0 duplicate blobs, 0 B duplicate

load all snapshots

find data that is still in use for 25 snapshots

[1:40] 100.00% 25 / 25 snapshots

found 2664883 of 2664883 data blobs still in use, removing 0 blobs

will remove 0 invalid files

will delete 0 packs and rewrite 0 packs, this frees 0 B

counting files in repo

[51:25] 100.00% 395322 / 395322 packs

finding old index files

saved new indexes as [625949a2 ab90eb8e 14b9628f b167409c f7ec7fcd 6b4c8610 bbe52582 71bfc8a6 3cba9ff0 1c7bde59 a7964e22 06b80d66 14c809a9 7b86e517 d99947de 2210743d 4491e925 bce9c547 b6f6d858 8c5971a1 55e33c29 f7ffa1d4 4cc2840d ce1abd72 b060fa02 d2fd09ff f4a0131b 374a9189 2dccd29a 1945cd5f c7ee7206 3d04a42d f438b047 452db6f1 27c963d7 f58fbd51 96b1f61e a9a0973d ba4d6703 e678c54c 51302c74 afbb4bac 8751b54e 94473541 d9b32531 60df7481 8106fd0c 61253b21 2e875c92 7df95dd3 0adf9c74 88170e77 91792c60 0ea4e491 39b94739 11c1fe4b 43b126ca cc735f84 27b9b442 081e8ab0 48e93444 1b0fa84c ffbfd282 838e6e98 84a12734 c2e4b6cf 6e175d0a 43998700 eb0a3082 05a82e0d 0ebca5a7 8a0007ce 2c726d2f 04b69cc9 f6ba1902 76e56673 9b0deb48 5a847d01 d0aaa74b 20fe5986 5467b907 b6f5bafc 53117b38 ae52eb33 fa327a6e 219a650c 37f52f15 8d15e4d8 b3c30a8c 38b23a1f 1a8c3cc5 2d7f8cb5 d051dae6 40e95005 a37e5883 aa541d2f de31889c 55ce512c 9c754673 aa1e62f6 8c11b17e 98c21930 c4425cef 295f092b daa08873 71c296e4 7ca9c531 43d3ead3 e397f19a 963e4a9e 531fd7c4 e68f7ee8 6e1c2dd8 8ee249fc 02068c0f 8ffe124f 6b2469bd 92aae0d2 464e119f b49edd74 feddbd73 a2bfe6ea cca616ce f30e6c1d 0614837e 120477e1 d39fe0f2 52ed1a31 6343369d 362e7a30 9039009b f6499019]

remove 132 old index files

done

In other words, if dropping a single snapshot is not an option and you still have the original file, then try deleting the corrupt pack and then running:

restic rebuild-index

restic backup --force

restic prune

Note that if you are using a newer version of restic which automatically heals repos (i.e. 0.12.0 or later), you may not need to use the --force option with the backup command.
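
The three recovery commands can be wrapped so that a failure in one step stops the sequence. This is only a sketch: the function name and the RESTIC override variable are my own inventions, the backup paths are whatever you normally back up, and the subcommand names are the ones used in this post (check `restic help` on your version):

```shell
# Run the recovery steps in order, stopping at the first failure.
# Set RESTIC to use a different binary (or a stub, for a dry run).
# Usage: restic_recover REPOSITORY PATH...
restic_recover() (
    repo=$1
    shift
    restic_cmd=${RESTIC:-restic}
    "$restic_cmd" -r "$repo" rebuild-index || exit 1
    # --force makes restic re-read every file instead of trusting the
    # previous snapshot, so the missing blobs are uploaded again.
    "$restic_cmd" -r "$repo" backup --force "$@" || exit 1
    "$restic_cmd" -r "$repo" prune || exit 1
)
```

Remember that the corrupt pack has to be deleted on the backup server first, and that a final check --read-data is still worth the wait.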